Mastering Deep SORT: The Future of Object Tracking Explained

Deep SORT (Simple Online and Realtime Tracking with a Deep Association Metric) improves upon the original SORT (Simple Online and Realtime Tracking) algorithm by introducing a deep association metric: appearance features learned by a neural network are used, alongside motion cues, to match detections to existing tracks. This makes tracking more robust to occlusions and viewpoint changes.

By incorporating deep learning into the association step, Deep SORT handles complex tracking scenarios that purely motion-based methods struggle with. This makes it a valuable tool for a wide range of applications, including surveillance, autonomous vehicles, and sports analytics.

Overall, Deep SORT is a significant advance in object tracking and shows how deep learning can enhance existing tracking algorithms in real-world settings.

This article goes into detail about Deep SORT, highlighting its pivotal role in multi-object tracking (MOT).

It also offers a detailed tutorial that guides you through integrating Deep SORT with state-of-the-art object detection algorithms, supplemented with clear Python code illustrations.

Multi-object tracking (MOT) refers to the process of locating and following multiple objects in a video or image sequence over time. This is a crucial task in computer vision and surveillance systems, as it enables understanding the movements and interactions of multiple objects simultaneously. The goal of multi-object tracking is to generate trajectories for each object in the scene and maintain their identities across frames, even when they may be occluded or temporarily disappear from view. To achieve this, multi-object tracking algorithms typically use a combination of object detection, data association, and trajectory prediction techniques. These algorithms must also address challenges such as handling occlusions, maintaining object identities in crowded scenes, and adapting to changes in object appearance or motion. Multi-object tracking has numerous applications in various fields, including autonomous vehicles, human activity analysis, crowd monitoring, and object recognition. Overall, multi-object tracking plays a vital role in enabling machines to understand and interpret complex dynamic environments.

The SORT algorithm, short for Simple Online and Realtime Tracking, is the foundation of Deep SORT. It is a simple yet effective tracking-by-detection algorithm that handles data association and track initiation in a multi-object tracking scenario. For each frame, a Kalman filter predicts the new position of every tracked object, the predicted boxes are associated with the new detections based on bounding-box overlap (IoU) using the Hungarian algorithm, and the matched detections are then used to correct the filter's estimates. SORT has been widely used due to its efficiency and accuracy, and it serves as the basis for the more advanced Deep SORT system, which enhances the data association step with learned appearance features to further improve tracking performance.
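
The overlap-based association that SORT relies on can be sketched as follows. This is a minimal illustration, not the reference implementation: boxes are assumed to be `(x1, y1, x2, y2)` tuples, the function names and the `min_iou` threshold are hypothetical, and a brute-force search over permutations stands in for the Hungarian algorithm (it finds the same optimal assignment, but is only practical for a handful of objects).

```python
from itertools import permutations

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def associate(tracks, detections, min_iou=0.3):
    """Return sorted (track_idx, det_idx) pairs that maximize total IoU.

    Brute-force stand-in for the Hungarian algorithm (assumption for
    illustration); pairs below min_iou are discarded afterwards.
    """
    n_t, n_d = len(tracks), len(detections)
    if n_t == 0 or n_d == 0:
        return []
    if n_t <= n_d:
        candidates = (list(zip(range(n_t), p))
                      for p in permutations(range(n_d), n_t))
    else:
        candidates = (list(zip(p, range(n_d)))
                      for p in permutations(range(n_t), n_d))
    best_pairs, best_score = [], -1.0
    for pairs in candidates:
        score = sum(iou(tracks[t], detections[d]) for t, d in pairs)
        if score > best_score:
            best_pairs, best_score = pairs, score
    return sorted((t, d) for t, d in best_pairs
                  if iou(tracks[t], detections[d]) >= min_iou)

# Predicted track boxes and new detections (made-up values).
tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
print(associate(tracks, detections))
```

In this toy example the third detection matches no track, so a tracker would typically use it to start a new track.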

Deep SORT (Simple Online and Realtime Tracking with a Deep Association Metric) is an algorithm used for multi-object tracking in video streams. It is an extension of the SORT algorithm, which uses a Kalman filter for object tracking. Deep SORT adds a deep association metric based on appearance features learned by a deep convolutional neural network. This allows the algorithm to handle situations where objects temporarily disappear or become occluded in the video stream. Deep SORT also assigns an ID to each object and maintains it across frames, which is crucial for applications such as surveillance, autonomous vehicles, and human-computer interaction. The algorithm follows a two-stage approach: it first generates object detections and then associates those detections with existing tracks. Overall, Deep SORT is more accurate and robust than trackers that rely on motion alone, particularly in keeping identities stable through occlusions.

Deep SORT is made up of four key components: detection and feature extraction, Kalman filter prediction, data association, and track management.

Deep SORT starts with object detection, often using a convolutional neural network (CNN) like YOLO (You Only Look Once) to identify objects within a frame. Each detection is associated with a high-dimensional appearance descriptor extracted by another CNN. These descriptors encode the appearance of the detected objects and are used for matching.

Similar to SORT, Deep SORT uses a Kalman filter for state prediction. In the original Deep SORT formulation, the state of an object comprises its bounding-box center position, aspect ratio, and height, together with their respective velocities, under a constant-velocity motion model. The Kalman filter predicts the state of each object in the current frame based on its last known state, accounting for the object's motion dynamics.
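
The predict/update cycle of a Kalman filter can be sketched in a few lines. To keep the example short, it tracks a single coordinate with a constant-velocity model instead of a full bounding-box state; the class name and the noise values (`q`, `r`, initial variances) are arbitrary assumptions for illustration, not Deep SORT's settings.

```python
class KalmanFilter1D:
    """Constant-velocity Kalman filter for one coordinate (illustrative)."""

    def __init__(self, position, pos_var=1.0, vel_var=10.0, q=0.01, r=1.0):
        self.p = position          # estimated position
        self.v = 0.0               # estimated velocity (unknown at start)
        self.P = [[pos_var, 0.0], [0.0, vel_var]]  # state covariance
        self.q = q                 # process noise added to each diagonal term
        self.r = r                 # measurement noise variance

    def predict(self, dt=1.0):
        """Propagate the state one step: x = F x, P = F P F^T + Q."""
        (a, b), (c, d) = self.P
        self.p += dt * self.v
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.p

    def update(self, z):
        """Correct the state with a position measurement z."""
        (a, b), (c, d) = self.P
        s = a + self.r             # innovation variance
        k0, k1 = a / s, c / s      # Kalman gain for position and velocity
        y = z - self.p             # innovation (measurement residual)
        self.p += k0 * y
        self.v += k1 * y
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]

# An object moving one unit per frame, observed without noise.
kf = KalmanFilter1D(position=0.0)
for z in range(1, 10):
    kf.predict()
    kf.update(float(z))
print(round(kf.v, 2), round(kf.predict(), 2))
```

After a few frames the velocity estimate approaches the true motion, so the prediction for the next frame lands close to where the object will actually be. This is what lets a tracker keep a plausible position for an object during a short detection gap.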

Data association in Deep SORT is where it significantly deviates from its predecessor. Instead of relying solely on IoU, it employs a combination of motion information and appearance features.

The Hungarian algorithm performs matching based on a cost matrix that considers both the Mahalanobis distance for motion consistency and the cosine distance for appearance similarity. The use of a deep association metric allows Deep SORT to continue tracking through short periods of occlusion.
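
A minimal sketch of how such a cost matrix might be built is shown below. It makes several simplifying assumptions that are labeled in the code: the covariance is treated as diagonal, each track keeps a small gallery of past descriptors, and the weight `lam` and threshold `max_cos` are made-up values. The gate of 9.4877 is the 0.95 quantile of the chi-square distribution with four degrees of freedom, which suits a four-dimensional measurement (center x, center y, aspect ratio, height). The resulting matrix would then be handed to a Hungarian solver.

```python
import math

CHI2_GATE_4DOF = 9.4877  # 0.95 quantile of chi-square with 4 degrees of freedom

def cosine_distance(a, b):
    """1 - cosine similarity between two appearance descriptors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def mahalanobis_sq(mean, var, z):
    """Squared Mahalanobis distance, assuming a diagonal covariance
    (a simplification for this sketch)."""
    return sum((zi - mi) ** 2 / vi for mi, vi, zi in zip(mean, var, z))

def cost_matrix(tracks, detections, lam=0.5, max_cos=0.2):
    """Blend motion and appearance costs; inadmissible pairs get infinity."""
    costs = []
    for trk in tracks:
        row = []
        for det in detections:
            d_motion = mahalanobis_sq(trk["mean"], trk["var"], det["box"])
            # Appearance cost: closest descriptor in the track's gallery.
            d_app = min(cosine_distance(f, det["feature"])
                        for f in trk["gallery"])
            if d_motion <= CHI2_GATE_4DOF and d_app <= max_cos:
                row.append(lam * d_motion + (1.0 - lam) * d_app)
            else:
                row.append(float("inf"))
        costs.append(row)
    return costs

# Hypothetical tracks (predicted box mean/variance + descriptor gallery)
# and detections (measured box + descriptor); all values are made up.
tracks = [
    {"mean": [100, 100, 0.5, 80], "var": [25, 25, 0.01, 25],
     "gallery": [[1.0, 0.0, 0.0]]},
    {"mean": [300, 120, 0.5, 90], "var": [25, 25, 0.01, 25],
     "gallery": [[0.0, 1.0, 0.0]]},
]
detections = [
    {"box": [302, 121, 0.5, 91], "feature": [0.1, 0.99, 0.0]},
    {"box": [101, 99, 0.5, 80], "feature": [0.98, 0.05, 0.0]},
]
for row in cost_matrix(tracks, detections):
    print(row)
```

Gating on both distances means a detection is only a candidate for a track when it is plausible in terms of motion and looks similar, which prevents far-away look-alikes and nearby but different-looking objects from being matched.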

Deep SORT manages track lifecycles using several heuristics. It introduces concepts such as track confirmation, where a track is only confirmed after being detected in several consecutive frames, thereby reducing false positives. There is also an age parameter to remove old tracks that have not been detected recently, ensuring the system only maintains active tracks.
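
These lifecycle rules can be sketched as a small state machine. The class below is illustrative only: the names `n_init` and `max_age` and their default values are assumptions chosen for the example, and a real tracker would also carry the Kalman state and appearance descriptors on each track.

```python
from enum import Enum

class TrackState(Enum):
    TENTATIVE = 1   # newly created, not yet trusted
    CONFIRMED = 2   # matched often enough to be reported
    DELETED = 3     # dropped; should be removed from the track list

class Track:
    def __init__(self, track_id, n_init=3, max_age=30):
        self.track_id = track_id
        self.n_init = n_init              # hits required for confirmation
        self.max_age = max_age            # tolerated consecutive misses
        self.hits = 1                     # the creating detection counts
        self.time_since_update = 0
        self.state = TrackState.TENTATIVE

    def mark_matched(self):
        """Call when a detection was associated with this track."""
        self.hits += 1
        self.time_since_update = 0
        if self.state is TrackState.TENTATIVE and self.hits >= self.n_init:
            self.state = TrackState.CONFIRMED

    def mark_missed(self):
        """Call when no detection was associated in the current frame."""
        self.time_since_update += 1
        if self.state is TrackState.TENTATIVE:
            # An unconfirmed track that misses a frame is likely a false positive.
            self.state = TrackState.DELETED
        elif self.time_since_update > self.max_age:
            self.state = TrackState.DELETED

track = Track(track_id=1, n_init=3, max_age=2)
track.mark_matched()
track.mark_matched()
print(track.state)  # confirmed after three consecutive hits
```

A larger `max_age` keeps tracks alive through longer occlusions at the cost of holding on to objects that have actually left the scene; a larger `n_init` suppresses more false positives but delays the first report of a new object.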

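Deep SORT's integration of appearance features with motion information provides several advantages: objects can be re-identified after short occlusions, identities are maintained more reliably in crowded scenes, and tracking is more robust to changes in viewpoint than with motion cues alone.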

Deep SORT's applications span a variety of fields, including surveillance, autonomous vehicles, and sports analytics.

Using the Ikomia API, you can effortlessly create a workflow for object detection and tracking in just a few lines of code.

To get started, you need to install the API in a virtual environment [2].

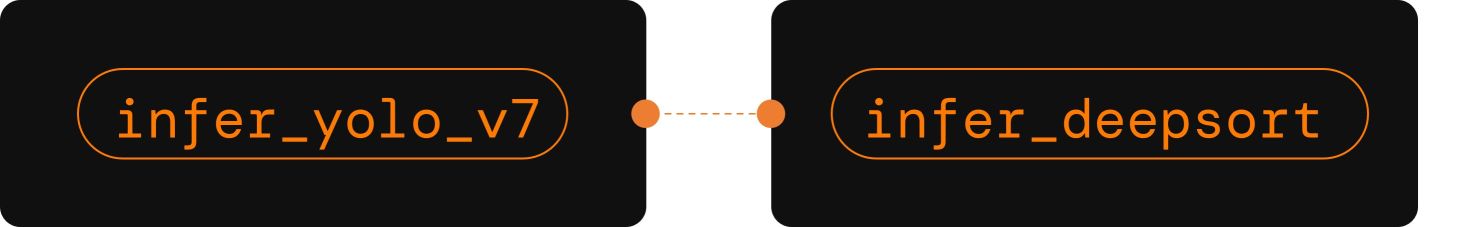

Here we run the following workflow:

First, we apply YOLOv7 for object detection and then track the detected objects using the Deep SORT algorithm. To process a video, just update the 'input_video_path' variable with the path of your video file.

You can also directly open the notebook we have prepared.

You can set the Deep SORT parameters by editing the following section.

In this tutorial, we've taken a dive into the world of object detection and tracking using YOLOv7 and Deep SORT.

But there's more! With Ikomia HUB, you've got access to plenty of ready-to-use algorithms that can help you take your project to the next level. You've got the power to pick your favorite object detection model and create a workflow that's tailor-made for your needs. And why not try out some other object tracking algorithms like ByteTrack?

If you are looking for more information on the API, our detailed documentation has got you covered. Or, if you'd rather learn by doing, Ikomia STUDIO is the perfect place to start experimenting without code but with all the bells and whistles our API has to offer.

[1] Real Time Pear Fruit Detection and Counting Using YOLOv4 Models and Deep SORT - doi.org/10.3390/s21144803