Easy text extraction using MMOCR: A Comprehensive Guide

MMOCR shines as a top-tier Optical Character Recognition (OCR) toolbox, especially within the Python community. For those unfamiliar with the platform, navigating through its documentation and installation steps can be somewhat daunting.

In this article, we'll guide you step-by-step, highlighting the essentials and addressing potential challenges of using the MMOCR inferencer, a specialized wrapper for OCR tasks.

Then, we'll present a simplified method to harness MMOCR's capabilities via the Ikomia API.

Dive in and elevate your OCR projects!

Optical Character Recognition (OCR) technology has come a long way. From the early days of digitizing printed text on paper to the modern applications that can read text from almost any surface, OCR has emerged as a pivotal element in the advancement of information processing.

One of the latest advancements in this domain is MMOCR—a comprehensive toolset for OCR tasks.

MMOCR is an open-source OCR toolbox based on PyTorch and is an integral component of the OpenMMLab initiative.

OpenMMLab is known for its commitment to pioneering Computer Vision tools such as MMDetection, and MMOCR is their answer to the growing demands of the OCR community.

The toolbox offers a variety of algorithms for different OCR tasks, including text detection, recognition, and key information extraction.

MMOCR, backed by the extensive resources and expertise of OpenMMLab, brings a multitude of features to the table. Let's explore some of the standout attributes:

As of September 2023, MMOCR supports 8 state-of-the-art algorithms for text detection and 9 for text recognition. This ensures users have the flexibility to choose the best approach for their specific use case.

MMOCR is designed with modularity in mind. This means users can easily integrate different components from various algorithms to create a custom OCR solution tailored to their needs.

For users who need a comprehensive solution, MMOCR offers end-to-end OCR systems that combine both text detection and recognition.

MMOCR is not just about inference. The toolbox provides utilities for training new models, as well as benchmarking them against standard datasets.

Being part of the OpenMMLab ecosystem, MMOCR benefits from a robust community of researchers and developers. This ensures regular updates, improvements, and access to the latest advancements in the field.

The flexibility and power of MMOCR make it suitable for a range of applications:

For this section, we will navigate through the MMOCR documentation for text extraction from documents. Before jumping in, we recommend that you review the entire process as we encountered some steps that were problematic.

OpenMMLab suggests specific Python and PyTorch versions for optimal results:

For this demonstration, we used a Windows setup.

Setting up a working environment begins with creating a Python virtual environment and then installing the Torch dependencies.

Though the prerequisites suggest Python 3.7, the code example provided in MMOCR documentation employs Python 3.8. We chose to follow the recommended Python version:

If you're unfamiliar with virtual environments, here's a guide on setting one up.
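
As a minimal sketch of this step, the environment can also be created from Python itself with the standard-library `venv` module. The environment name `openmmlab` comes from this walkthrough; the `create_env` helper is hypothetical and exists only for illustration. Keep in mind that `venv` reuses the interpreter that runs it, so the script has to be launched with the Python version you want inside the environment.

```python
import sys
import venv
from pathlib import Path


def create_env(env_dir="openmmlab", with_pip=True):
    """Create a virtual environment and return the path of its interpreter.

    Hypothetical helper: roughly what `python -m venv openmmlab` does.
    """
    env_path = Path(env_dir)
    venv.create(env_path, with_pip=with_pip)
    # Windows puts executables in Scripts\, other platforms in bin/.
    if sys.platform == "win32":
        return env_path / "Scripts" / "python.exe"
    return env_path / "bin" / "python"
```

On Windows, the environment would then be activated with `openmmlab\Scripts\activate`.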

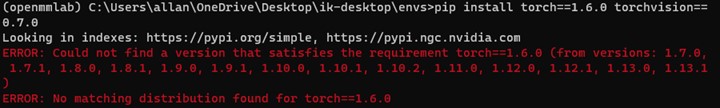

After activating the 'openmmlab' virtual environment, we proceed to install the PyTorch dependencies:

However, we encountered an error, as pip could not locate a compatible distribution.

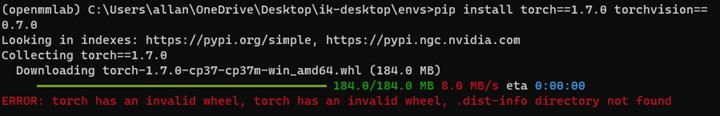

This attempt was met with the 'invalid wheel' error.

To resolve this, we consulted the official PyTorch documentation, which suggested the following versions: torch 1.7.1 and torchvision 0.8.2. However, the torchvision version recommended by the documentation, 0.7.0, is not compatible with torch 1.7.1.
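
The mismatch is easier to see when the pairing is written down. The sketch below hard-codes two torch/torchvision pairings (torch 1.6.0 with torchvision 0.7.0, and torch 1.7.1 with torchvision 0.8.2) and checks a requested combination against them. The table is a deliberately tiny subset, and the `is_compatible` helper is an illustrative assumption, not part of PyTorch or MMOCR; the PyTorch release pages remain the reference for the full matrix.

```python
# Illustrative subset of the torch -> torchvision pairing table.
TORCHVISION_FOR_TORCH = {
    "1.6.0": "0.7.0",
    "1.7.1": "0.8.2",
}


def _base(version):
    """Drop a local build tag such as '+cu110' from a version string."""
    return version.split("+", 1)[0]


def is_compatible(torch_version, torchvision_version):
    """Return True only if the pair matches the table above."""
    expected = TORCHVISION_FOR_TORCH.get(_base(torch_version))
    return expected is not None and expected == _base(torchvision_version)
```

Here `is_compatible("1.7.1", "0.7.0")` is `False`, which mirrors the conflict described above, while `is_compatible("1.7.1", "0.8.2")` is `True`.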

Then we install the following dependencies using MIM: MMEngine, MMCV, and MMDetection.

While 'openmim' and 'mmengine' installed smoothly, the 'mmcv' installation was prolonged, taking about 35 minutes.

Subsequently, we installed 'mmocr' from the source, as per the recommendation:
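
The dependency chain described above can be scripted so that the order is explicit and repeatable. This is a sketch under stated assumptions: the package names come from this walkthrough, while the unpinned versions, the repository URL, and the editable-install flags follow the MMOCR installation instructions as we understand them and may need adjusting for your setup. `INSTALL_STEPS` and `run_install` are hypothetical names.

```python
import subprocess

# (command, working directory) pairs; None means the current directory.
# Assumption: unpinned versions; pin them if your setup requires it.
INSTALL_STEPS = [
    (["pip", "install", "-U", "openmim"], None),
    (["mim", "install", "mmengine"], None),
    (["mim", "install", "mmcv"], None),   # the slow step in our run
    (["mim", "install", "mmdet"], None),
    (["git", "clone", "https://github.com/open-mmlab/mmocr.git"], None),
    (["pip", "install", "-v", "-e", "."], "mmocr"),  # editable install from source
]


def run_install(steps=INSTALL_STEPS, dry_run=True):
    """Print each step in order; execute it only when dry_run is False."""
    lines = []
    for command, cwd in steps:
        line = " ".join(command)
        print(f"[{cwd or '.'}] {line}")
        if not dry_run:
            # check=True stops at the first failing step.
            subprocess.run(command, cwd=cwd, check=True)
        lines.append(line)
    return lines
```

Calling `run_install()` only prints the commands; `run_install(dry_run=False)` executes them inside the activated environment.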

We evaluated our setup using a sample image, applying DBNet for text detection and CRNN for text recognition:
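
A minimal sketch of such a call, assuming MMOCR 1.x, where `MMOCRInferencer` is imported from `mmocr.apis` and returns a dictionary whose `predictions` entries carry `rec_texts` and `rec_scores`. The `run_ocr` and `confident_texts` helpers and the `min_score` threshold are hypothetical and only illustrate how the output could be consumed.

```python
def run_ocr(image_path, det="DBNet", rec="CRNN"):
    """Run detection + recognition on one image and return the raw result."""
    # Imported lazily so this module loads even where mmocr is not installed.
    from mmocr.apis import MMOCRInferencer

    ocr = MMOCRInferencer(det=det, rec=rec)
    return ocr(image_path, show=False, print_result=False)


def confident_texts(result, min_score=0.5):
    """Return (text, score) pairs whose recognition score reaches min_score.

    Assumes the result layout described in the lead-in:
    {"predictions": [{"rec_texts": [...], "rec_scores": [...]}, ...]}
    """
    pairs = []
    for prediction in result["predictions"]:
        for text, score in zip(prediction["rec_texts"], prediction["rec_scores"]):
            if score >= min_score:
                pairs.append((text, score))
    return pairs
```

With MMOCR installed, `confident_texts(run_ocr("path/to/image.jpg"))` would list the recognized strings, where the path is a placeholder for your own image. Other model names can be passed through the `det` and `rec` arguments in the same way.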

To evaluate MMOCR's performance further, we executed OCR on an invoice image using the DBNetpp model for text detection and ABINet_Vision for text recognition:

MMOCRInferencer serves as a user-friendly interface for OCR, integrating text detection and text recognition. From the initial setup to obtaining inference results, the entire process took approximately 50 minutes. Notably, the 'mmcv' compilation and installation took up a significant chunk of this time.

Given the numerous dependencies required to run MMOCR, we encountered outdated dependencies and subsequent conflicts—issues all too familiar to Python developers.

As library versions rapidly evolve, the challenges we encountered here may change, potentially improving or worsening in the coming weeks or months.

In the following section, we'll demonstrate how to simplify the installation and usage of MMOCR via the Ikomia API, significantly reducing both the steps and time needed to execute your OCR tasks.

With the Ikomia team, we've been working on a prototyping tool that shortens the tedious installation and testing phases.

We wrapped it in an open source Python API. Now we're going to explain how to use it to extract text with MMOCR in less than 10 minutes instead of 50.

If you have any questions, please join our Discord.

As usual, we will use a virtual environment.

Then the only thing you need to install is Ikomia API:

You can also directly load the open-source notebook we have prepared.

By default this workflow uses DBNet for text detection and SATRN for text recognition.
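
A workflow of this kind can be sketched as follows. The code reflects the Ikomia API as we recall it (`Workflow`, `add_task` with `auto_connect`, `run_on`, and `get_image_with_visualization`), so treat the exact signatures as assumptions and check them against the current Ikomia documentation. The `build_ocr_workflow` and `run_ikomia_ocr` helpers are hypothetical.

```python
# Algorithm names as listed on the Ikomia HUB: detection first, then recognition.
OCR_TASKS = ("infer_mmlab_text_detection", "infer_mmlab_text_recognition")


def build_ocr_workflow():
    """Create a workflow that chains text detection and text recognition."""
    # Imported lazily so this module loads even where ikomia is not installed.
    from ikomia.dataprocess.workflow import Workflow

    wf = Workflow()
    # auto_connect=True links each new task to the previous one.
    tasks = [wf.add_task(name=name, auto_connect=True) for name in OCR_TASKS]
    return wf, tasks


def run_ikomia_ocr(image_path):
    """Run the workflow on a local image and return the annotated image."""
    wf, (text_det, text_rec) = build_ocr_workflow()
    wf.run_on(path=image_path)
    return text_rec.get_image_with_visualization()
```

Leaving the task parameters untouched keeps the default models mentioned above.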

To adjust the algorithm parameters, consult the HUB pages, a multi-framework library documenting all available algorithms in Ikomia API:

- infer_mmlab_text_recognition

To carry out OCR, we simply installed Ikomia and ran the workflow code snippets. All dependencies were handled seamlessly in the background. By using a pre-compiled mmcv, we progressed from setting up a virtual environment to obtaining results in approximately 8 minutes.

For those of you who prefer working with a user interface, the Ikomia API is also available as a desktop app called STUDIO. The functioning and results are the same as with the API, with a drag and drop interface.

Real-world text extraction applications often require a broader spectrum of algorithms than text detection and recognition alone. This includes tasks like document detection/segmentation, rotation, and key information extraction.

One of the standout benefits of the API, aside from simplifying dependency installations, is its ability to seamlessly chain algorithms from diverse frameworks such as OpenMMLab, YOLO, Hugging Face, and Detectron2.

Deployment is often a big hurdle for Python developers. To bridge this gap, we introduce SCALE, a user-centric SaaS platform. SCALE is designed to deploy all your Computer Vision endeavors, eliminating the need for specialized MLOps knowledge.