Grounding DINO: Leading the Way in Zero-Shot Object Detection

The field of Computer Vision has witnessed significant advancements in recent years, especially in object detection tasks. Traditional object detection models require substantial amounts of labeled data for training, making them expensive and time-consuming.

However, the emergence of zero-shot object detection models promises to overcome these limitations and generalize to unseen objects with minimal data.

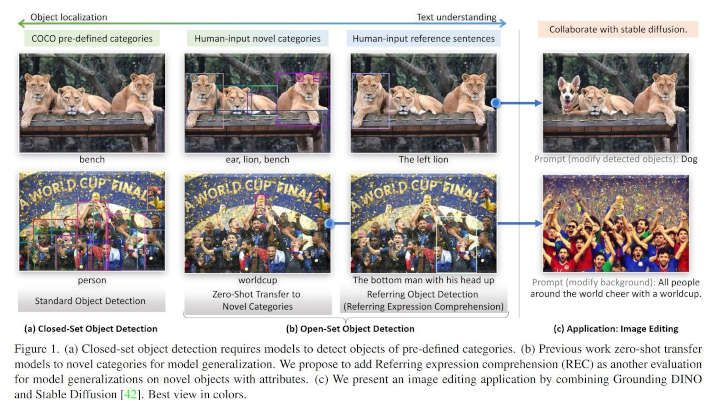

Grounding DINO is a cutting-edge zero-shot object detection model that marries the powerful DINO architecture with grounded pre-training. Developed by IDEA-Research, Grounding DINO can detect arbitrary objects based on human inputs, such as category names or referring expressions.

GroundingDINO is built on top of the DINO model, a transformer-based architecture well-known for its success in image classification and object detection tasks. The novel addition to GroundingDINO is the grounding module, which facilitates the relationship between language and visual content.

During training, the grounding module processes a dataset comprising images and corresponding text descriptions. The model learns to associate words in text descriptions with specific regions in images. This enables Grounding DINO to identify and detect objects in unseen images, even without any prior knowledge of those objects.

Grounding DINO showcases impressive performance in various zero-shot object detection benchmarks, including COCO, LVIS, ODinW, and RefCOCO/+/g. Notably, it achieves an Average Precision (AP) score of 52.5 on the COCO detection zero-shot transfer benchmark. Additionally, Grounding DINO sets a new record on the ODinW zero-shot benchmark, boasting a mean AP.

Grounding DINO stands out in the realm of zero-shot object detection by excelling at identifying objects that are not part of the predefined set of classes in the training data. This unique capability allows the model to adapt to novel objects and scenarios, making it highly versatile and applicable to a wide range of real-world tasks.

Unlike traditional object detection models that require exhaustive training on labeled data, Grounding DINO can generalize to new objects without the need for additional training, significantly reducing the data collection and annotation efforts.

One of the impressive features of Grounding DINO is its ability to perform Referring Expression Comprehension (REC). This means that the model can localize and identify a specific object or region within an image based on a given textual description.

For example, instead of merely detecting people and chairs in an image, the model can be prompted to detect only those chairs where a person is sitting. This requires the model to possess a deep understanding of both language and visual content and the ability to associate words or phrases with corresponding visual elements.

This capability opens up exciting possibilities for natural language-based interactions with the model, making it more intuitive and user-friendly for various applications.

Grounding DINO simplifies the object detection pipeline by eliminating the need for hand-designed components, such as Non-Maximum Suppression (NMS). NMS is a common technique used to suppress duplicate bounding box predictions and retain only the most accurate ones.

By integrating this process directly into the model architecture, Grounding DINO streamlines the training and inference processes, improving efficiency and overall performance.

This not only reduces the complexity of the model but also enhances its robustness and reliability, making it a more effective and efficient solution for object detection tasks.

While GroundingDINO shows great potential, its accuracy may not yet match that of traditional object detection models, like YOLOv7 or YOLOv8. Moreover, the model relies on a significant amount of text data for training the grounding module, which can be a limitation in certain scenarios.

As the technology behind Grounding DINO evolves, it is likely to become more accurate and versatile, making it an even more powerful tool for various real-world applications.

Grounding DINO represents a significant step forward in the field of zero-shot object detection. By marrying the DINO model with grounded pre-training, it achieves impressive results in detecting arbitrary objects with limited data. Its ability to understand the relationship between language and visual content opens up exciting possibilities for various applications.

As researchers continue to refine and expand the capabilities of Grounding DINO, we can expect this model to become an essential asset in the world of Computer Vision, enabling us to identify and detect objects in previously unexplored scenarios with ease.

In this section, we will cover the necessary steps to run the Grounding DINO object detection model.

You can also charge directly the open-source notebook we have prepared.

In this section, we use the Ikomia API, which streamlines the development of Computer Vision workflows and offers a straightforward method to test various parameters for optimal outcomes.

With the Ikomia API, creating a workflow using GroundingDINO for object detection becomes effortless, requiring only a few lines of code.

To get started, you need to install the API in a virtual environment.

How to install a virtual environment

In this section, we will demonstrate how to utilize the Ikomia API to create a workflow for object detection with Grouding DINO as presented above.

We initialize a workflow instance. The “wf” object can then be used to add tasks to the workflow instance, configure their parameters, and run them on input data.

You can apply the workflow to your image using the ‘run_on()’ function. In this example, we use the image url:

Finally, you can display our image results using the display function:

Here are some more GroundingDINO inference using different prompts:

In this tutorial, we have explored the process of creating a workflow for object detection with Grounding DINO.

The Ikomia API simplifies the development of Computer Vision workflows and allows for easy experimentation with different parameters to achieve optimal results.

To learn more about the API, please refer to the documentation. You may also check out the list of state-of-the-art algorithms on Ikomia HUB and try out Ikomia STUDIO, which offers a friendly UI with the same features as the API.

(1) Photo by Feyza Yıldırım: 'people-sitting-at-the-table-on-an-outdoor-restaurant-patio' - Pexels.