Understanding ResNeXt: Revolutionizing Convolutional Neural Networks

Building upon the successes of its predecessor, ResNet, ResNeXt introduces a novel approach to convolutional neural networks (CNNs) that enhances their performance and efficiency.

This post delves into the core concepts of ResNeXt, its unique features, and its implications in the field of AI and deep learning.

ResNeXt is a residual network built from aggregated transformations; the name points to the "next" dimension it adds to ResNet. It is a type of CNN introduced to address some of the limitations of traditional CNN architectures.

The key innovation in ResNeXt is its use of "cardinality" – the size of the set of transformations – as a new dimension, along with depth and width, for scaling up neural networks.

The ResNeXt model, introduced in the 2016 paper 'Aggregated Residual Transformations for Deep Neural Networks' [1] by Saining Xie, Kaiming He and colleagues, blends the layer-stacking approach of VGG/ResNet with Inception's split-transform-merge strategy.

Its primary aim is to improve accuracy without making the network deeper or more computationally expensive.

In ResNeXt, cardinality refers to the number of parallel transformation paths within a network block.

This concept introduces a new dimension for scaling the complexity of the network, different from the traditional methods of increasing the depth (number of layers) or width (number of units per layer).

Cardinality allows for more diverse and rich feature transformations without significantly complicating the network's structure.

The architecture first splits the input into multiple parallel paths. Each path applies a transformation with the same topology (the same layer layout) but its own weights. Finally, the outputs of these paths are aggregated (merged).

This strategy enhances the learning capability of the network without a significant increase in complexity.
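The split-transform-merge idea can be sketched in a few lines of plain Python. This is a toy illustration rather than the paper's implementation: it works on a single channel vector instead of an image, replaces the 3x3 convolution with a small linear map, and assumes each path has the same layout but its own weights. The helper names (`make_path`, `path_forward`, `resnext_block`) are made up for this example.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, v) for v in vector]


def make_path(rng, channels, width):
    """One path: reduce (channels -> width), transform (width -> width), expand (width -> channels)."""
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return mat(width, channels), mat(width, width), mat(channels, width)


def path_forward(path, x):
    reduce_w, transform_w, expand_w = path
    h = relu(matvec(reduce_w, x))      # stands in for the 1x1 reduction
    h = relu(matvec(transform_w, h))   # stands in for the 3x3 transformation
    return matvec(expand_w, h)         # back to the input dimension


def resnext_block(paths, x):
    """Feed x to every path, sum the path outputs, then add the shortcut."""
    merged = [0.0] * len(x)
    for path in paths:
        out = path_forward(path, x)
        merged = [m + o for m, o in zip(merged, out)]
    return relu([xi + mi for xi, mi in zip(x, merged)])


rng = random.Random(0)
channels, width, cardinality = 8, 2, 4
paths = [make_path(rng, channels, width) for _ in range(cardinality)]
x = [rng.uniform(-1.0, 1.0) for _ in range(channels)]
y = resnext_block(paths, x)
print(len(y))  # same number of channels as the input
```

Raising `cardinality` adds more paths of the same shape; nothing else in the block has to change.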

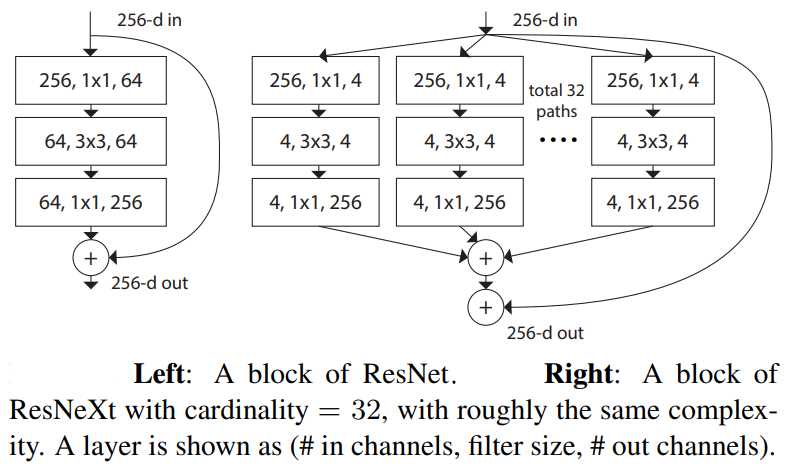

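In the illustration above, the architecture on the left represents ResNet, while on the right, you see ResNeXt. The ResNeXt block applies the split-transform-merge strategy across many parallel paths; the ResNet bottleneck block can be read as the special case with a single path.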

This approach first projects the input to a lower dimension with a 1x1 convolutional layer, then applies transformations using 3x3 convolutional filters, and finally merges the outputs through a summation operation.

The key aspect of this strategy is that the transformations are derived from the same structural design, facilitating ease of implementation without necessitating specialized architectural modifications. The primary goal of ResNeXt is to effectively manage large input sizes and enhance network accuracy.

This is achieved not by adding more layers, but by increasing the cardinality, that is, the number of parallel paths in the network. This approach boosts performance while keeping the network relatively simple compared to deeper networks.
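To see how cardinality can grow while complexity stays roughly constant, the weight count of one bottleneck block can be worked out by hand. The sketch below is a back-of-the-envelope calculation, not code from the paper: it assumes a 256-channel input, a 1x1 / 3x3 / 1x1 path layout, and ignores biases and batch normalization. The (cardinality, width) pairs are the settings reported in the ResNeXt paper; `block_params` is a name chosen for this example.

```python
def block_params(cardinality, width, channels=256):
    """Weights in one block made of `cardinality` identical 1x1 -> 3x3 -> 1x1 paths."""
    reduce = channels * width            # 1x1 conv: channels -> width
    transform = 3 * 3 * width * width    # 3x3 conv: width -> width
    expand = width * channels            # 1x1 conv: width -> channels
    return cardinality * (reduce + transform + expand)


# (cardinality, width per path); the first row is the plain ResNet bottleneck
settings = [(1, 64), (2, 40), (4, 24), (8, 14), (32, 4)]
counts = {c: block_params(c, d) for c, d in settings}

for c, d in settings:
    print(f"cardinality={c:>2}  width={d:>2}  params={counts[c]:,}")
```

Every row lands close to 70k weights: as the number of paths goes up, each path gets narrower, so the budget is spent on more paths rather than on more layers.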

Like ResNet, ResNeXt utilizes residual connections, which help in avoiding the vanishing gradient problem in deep networks. These connections allow the network to learn identity functions, ensuring that deeper models can perform at least as well as shallower ones.
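The residual connection and the aggregation can be written as one formula. The symbols follow the notation of the original paper and are not defined elsewhere in this post: $x$ is the block input, $C$ the cardinality, and $\mathcal{T}_i$ the transformation applied by path $i$.

```latex
y = x + \sum_{i=1}^{C} \mathcal{T}_i(x)
```

If every $\mathcal{T}_i$ outputs zero, the block reduces to $y = x$, which is the identity mapping mentioned above.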

ResNeXt demonstrates increased performance without significantly raising computational complexity. This efficiency stems from paths that all share one topology, which allows them to be implemented together as a single grouped convolution.
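A grouped convolution is what keeps the parallel paths cheap: each group only sees its own slice of the channels. The toy example below, a sketch with made-up weights and a single pixel rather than a full feature map, shows that a grouped 1x1 convolution gives the same result as a dense one whose weight matrix is block-diagonal, while storing fewer weights. All function names are illustrative.

```python
def grouped_pointwise(x, group_weights):
    """Grouped 1x1 convolution at one pixel: each group reads only its own channel slice."""
    groups = len(group_weights)
    in_per_group = len(x) // groups
    out = []
    for g, weight in enumerate(group_weights):
        chunk = x[g * in_per_group:(g + 1) * in_per_group]
        out.extend(sum(w * v for w, v in zip(row, chunk)) for row in weight)
    return out


def as_dense(group_weights, in_per_group):
    """Expand per-group weights into the equivalent block-diagonal dense matrix."""
    groups = len(group_weights)
    dense = []
    for g, weight in enumerate(group_weights):
        left = [0] * (g * in_per_group)
        right = [0] * ((groups - g - 1) * in_per_group)
        for row in weight:
            dense.append(left + list(row) + right)
    return dense


def dense_pointwise(x, dense):
    return [sum(w * v for w, v in zip(row, x)) for row in dense]


# 4 input channels, 2 groups, each group maps 2 channels to 2 channels
group_weights = [
    [[1, 2], [3, 4]],
    [[5, 6], [7, 8]],
]
x = [1, 0, 2, -1]

grouped_out = grouped_pointwise(x, group_weights)
dense_out = dense_pointwise(x, as_dense(group_weights, in_per_group=2))

grouped_count = sum(len(row) for weight in group_weights for row in weight)
dense_count = len(x) * len(grouped_out)
print(grouped_out == dense_out, grouped_count, dense_count)
```

With `g` groups the layer stores `1/g` of the weights of the dense version, which is why a block with many paths can cost about the same as a single wide one.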

The Ikomia API simplifies the process of image classification using ResNeXt, requiring minimal coding effort.

Start by setting up a virtual environment [3] and then install the Ikomia API within it (for example with `pip install ikomia`) for an optimized workflow.

You can also directly open the notebook we have prepared.

This article provided an in-depth look at ResNeXt, a highly efficient deep learning model.

We also discussed how the Ikomia API eases the integration of ResNeXt algorithms, reducing the complexity of handling dependencies.

The API optimizes Computer Vision workflows, providing adaptable parameter settings for training and testing stages.

To dive deeper, explore how to train ResNet/ResNeXt models on your custom dataset.

[1] Aggregated Residual Transformations for Deep Neural Networks